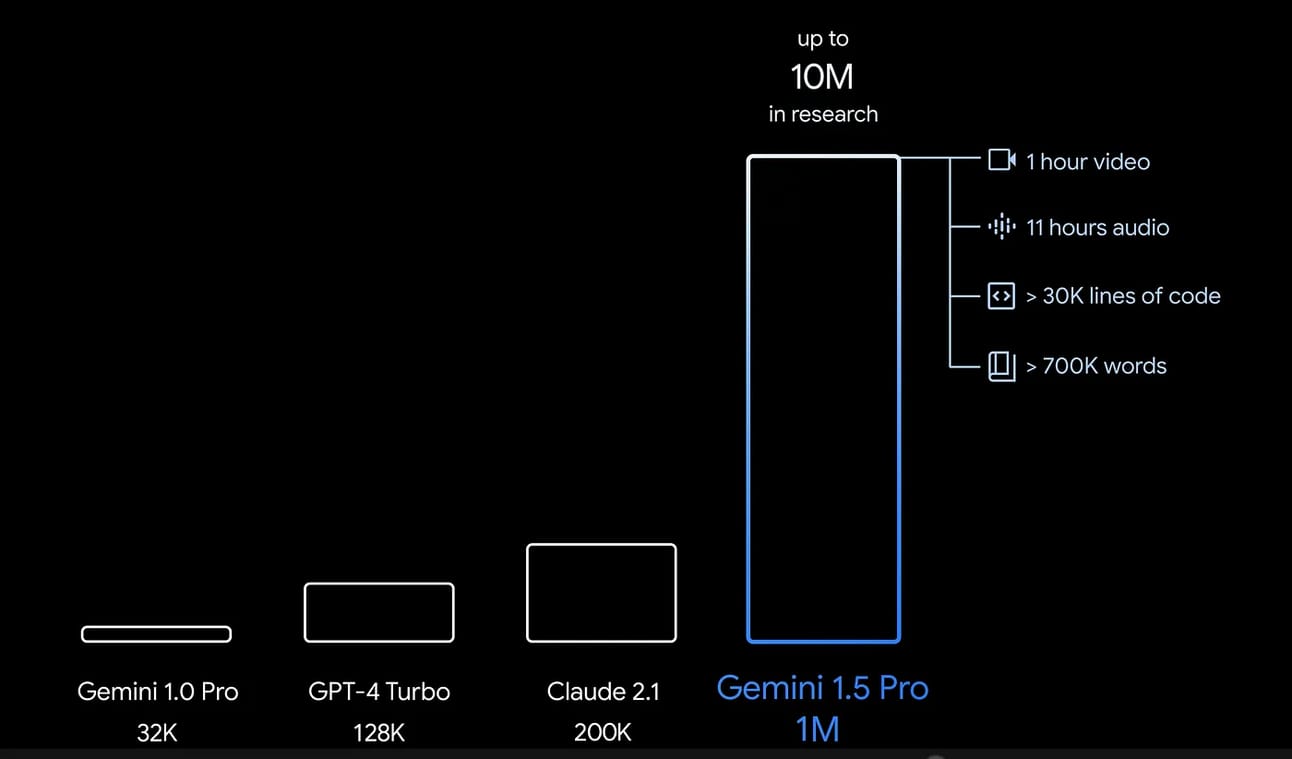

Earlier this year, Google unveiled Gemini 1.5 Pro, a multimodal model with a groundbreaking 1 million token context window, far surpassing the 200,000-token capacity of its nearest competitors. This leap enables the model to handle much larger text corpora, setting a new standard for large-scale language processing.

Other major models are also expanding their context windows:

Anthropic's Claude: Increased its context window to 200,000 tokens, making it a strong competitor for tasks requiring longer input sequences.

OpenAI's GPT-4: Released versions supporting up to 128,000 tokens, offering a significant improvement over earlier iterations.

With tokens being the smallest units the model processes (an English word averages roughly 1.3 tokens; see https://platform.openai.com/tokenizer), Gemini 1.5 Pro can hold approximately 750,000 words in a single context. This allows for powerful use cases, such as:

Financial analysts processing decades' worth of fiscal reports to identify trends and compare data across years.

Querying any detail from the entire "Lord of the Rings" trilogy (about 450,000 words) in one pass.

Despite the excitement (and perhaps eased workload for high school students), context windows aren’t infinitely scalable. As they grow, practical limitations make them harder to implement and prevent widespread adoption.

Book reports just got a whole lot easier!

Limitations and Challenges

Despite the excitement around large-context models, their adoption is limited by significant challenges. Both Google and Anthropic have restricted access to their advanced models, like the 1 million token Gemini 1.5, to a select group of testers, with even tighter controls on the more ambitious 10 million token versions. This cautious release reflects the underlying difficulties—particularly the immense processing demands that push hardware, like Google’s Tensor Processing Units (TPUs), to their thermal limits (https://blog.google/technology/ai/long-context-window-ai-models/). It underscores that integrating and practically using these models is more complex than it seems.

In today’s landscape of large language models, companies like Google have moved toward a closed-source approach, keeping model training details confidential. This is a shift from Google’s earlier openness with breakthroughs like the Transformer and BERT. Little has been published about the advances that let models like Gemini 1.5 Pro handle up to 10 million tokens; the technical report offers only vague references to "significant architecture changes" (Gemini 1.5 Technical Report).

Public demos of Gemini 1.5 (https://www.youtube.com/watch?v=SSnsmqIj1MI&ab_channel=Google) show lengthy inference times, averaging 60 seconds per output, and Google’s vague blog updates suggest limited progress in managing large-context models efficiently. While the foundational research is public, the specific innovations remain undisclosed. In the following sections, we'll explore possible innovations and explain why increasing context length alone may not solve all the challenges of large-scale text processing.

Alternative Approaches to Handling Large Text Corpora

Beyond large-context models, two main methods are used to analyze extensive text:

Retrieval-Augmented Generation (RAG)

Fine-Tuning

Retrieval-Augmented Generation (RAG)

RAG retrieves relevant sections of a large text corpus based on a user's query and integrates this information into the model's response. This approach:

Streamlines Processing: Only relevant information is processed, rather than feeding the entire corpus into the model.

Keeps Models Current: The retrieval database can be updated independently, avoiding the need to retrain the model.

Enables Flexibility: Outdated or unwanted sources can be removed from the retrieval system, unlike neural networks that struggle to "forget" learned data (https://blog.research.google/2023/06/announcing-first-machine-unlearning.html).
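To make the retrieve-then-generate flow concrete, here is a minimal sketch in plain Python. The `TinyRetriever` class, its keyword-overlap scoring, and the sample documents are all hypothetical stand-ins for illustration; a production system would use one of the retrieval backends discussed below and pass the prompt to an actual model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyRetriever:
    """Hypothetical in-memory store that scores documents by keyword overlap."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}

    def add(self, doc_id: str, text: str) -> None:
        # Updating knowledge is a data operation; the model is untouched.
        self.docs[doc_id] = text

    def remove(self, doc_id: str) -> None:
        # "Forgetting" a source is a dictionary delete, not retraining.
        self.docs.pop(doc_id, None)

    def search(self, query: str, k: int = 2) -> list[str]:
        q = Counter(tokenize(query))
        scored = []
        for doc_id, text in self.docs.items():
            d = Counter(tokenize(text))
            overlap = sum(min(q[t], d[t]) for t in q)
            if overlap > 0:
                scored.append((overlap, doc_id))
        # Highest overlap first; ties broken by document id.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [doc_id for _, doc_id in scored[:k]]


def build_prompt(retriever: TinyRetriever, query: str, k: int = 2) -> str:
    """Place only the retrieved passages, not the whole corpus, in the prompt."""
    passages = [retriever.docs[i] for i in retriever.search(query, k)]
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


store = TinyRetriever()
store.add("fy2022", "Revenue in fiscal year 2022 grew 12 percent.")
store.add("fy2023", "Revenue in fiscal year 2023 grew 8 percent.")
store.add("hr", "The office is closed on public holidays.")
print(build_prompt(store, "How much did revenue grow in 2023?", k=1))
```

Calling `store.remove("fy2023")` afterwards makes the same query fall back to the 2022 report, which is the kind of targeted removal that is hard to achieve once information lives in model weights.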

RAG is used by AI search engines like Bing and Perplexity, utilizing various retrieval methods:

Vector Databases (e.g., Pinecone, ChromaDB) for similarity searches.

Lexical Retrieval (e.g., Elasticsearch) for traditional keyword-based searches, where documents are retrieved based on exact word matches rather than semantic meaning.

Neural Databases (e.g., ThirdAI’s NeuralDB) that combine both lexical and semantic retrieval for efficient querying.
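The difference between these retrieval styles can be sketched in a few lines. The example below is an illustration only: the three-dimensional "embeddings" are hand-written toy values rather than output from a real embedding model, the `alpha` blending weight is an assumed parameter, and none of this reflects the actual APIs of Pinecone, ChromaDB, Elasticsearch, or NeuralDB.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Semantic score: cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def lexical(query: str, text: str) -> float:
    """Lexical score: fraction of query words that appear verbatim in the text."""
    q = set(query.lower().split())
    t = set(text.lower().split())
    return len(q & t) / len(q) if q else 0.0


# Toy corpus: (text, hand-made embedding). Real embeddings come from a model.
DOCS = {
    "garage": ("automobile engine maintenance guide", [0.9, 0.1, 0.0]),
    "pets": ("the cat sat on the car", [0.1, 0.9, 0.1]),
}


def rank(query: str, q_vec: list[float], alpha: float) -> list[str]:
    """alpha=1.0 is purely lexical, alpha=0.0 purely semantic, in between is hybrid."""
    def score(item):
        text, vec = item[1]
        return alpha * lexical(query, text) + (1 - alpha) * cosine(q_vec, vec)
    return [doc_id for doc_id, _ in sorted(DOCS.items(), key=score, reverse=True)]


query, q_vec = "car repair", [1.0, 0.0, 0.0]
print("lexical :", rank(query, q_vec, alpha=1.0))
print("semantic:", rank(query, q_vec, alpha=0.0))
print("hybrid  :", rank(query, q_vec, alpha=0.5))
```

In this toy setup the keyword match favors the document that merely contains the word "car", while the vector score favors the document about automobiles; the blended score sides with the semantically closer one.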

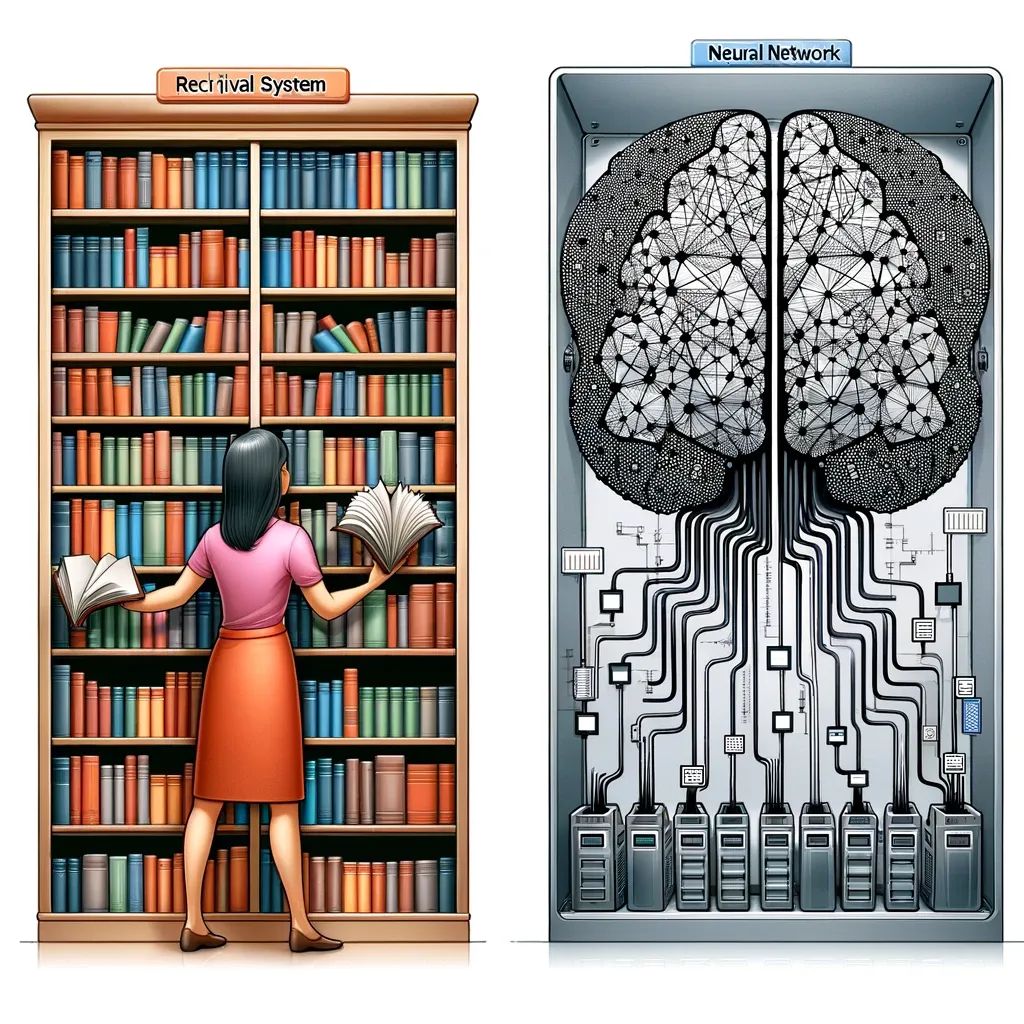

A major benefit of decoupling retrieval from generation is the ability to update the retrieval database independently, keeping the model current without retraining. Retrieval systems are also more computationally efficient than feeding entire text corpora into a model or fine-tuning it. This makes them ideal for data-sensitive applications, as they can be hosted on-premises without external network calls. Additionally, they offer flexibility in removing outdated or unwanted information—something neural networks struggle with, as they cannot easily "forget" learned data.

Retrieval system's ability to update and remove outdated information, compared to the more static nature of neural networks

Fine-tuning

Fine-tuning adapts a language model to specific text collections, embedding this information directly into the model's weights. This removes the need to input reference text into the context window, but it comes with challenges:

High Computational Costs: Fine-tuning requires substantial computational resources, making it impractical for many users.

Difficulty in Updating: Once a model is fine-tuned, updating or removing specific information is cumbersome.

Risk of Hallucinations: Without direct access to source text, models may generate inaccurate information.

Techniques like LoRA fine-tuning and quantization help reduce computational costs, but they introduce trade-offs in performance and flexibility. Compared to RAG or long-context models, fine-tuned models have a higher risk of hallucinations due to the lack of direct reference material.
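As a rough illustration of why LoRA lowers the cost, the sketch below adds a low-rank update `B(Ax)` to a frozen weight matrix and counts trainable parameters. The helper names, the 4096-wide layer, and the rank of 8 are assumptions chosen for the example, not values taken from any particular model.

```python
def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, scale: float = 1.0) -> list[float]:
    """y = W x + scale * B (A x). W stays frozen; only A and B are trained."""
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))  # never forms the full d_out x d_in product B @ A
    return [y + scale * u for y, u in zip(base, update)]


def trainable_params(d_in: int, d_out: int, rank=None) -> int:
    """Full fine-tuning trains d_in * d_out weights; LoRA trains rank * (d_in + d_out)."""
    if rank is None:
        return d_in * d_out
    return rank * (d_in + d_out)


w = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2x2 weight
a = [[1.0, 1.0]]               # rank-1 down-projection (1 x 2)
b_init = [[0.0], [0.0]]        # B starts at zero, so the model is unchanged at first
b_trained = [[1.0], [2.0]]     # made-up values standing in for a trained B
x = [1.0, 2.0]

print(lora_forward(w, a, b_init, x))      # [1.0, 2.0]
print(lora_forward(w, a, b_trained, x))   # [4.0, 8.0]
print(trainable_params(4096, 4096) // trainable_params(4096, 4096, rank=8))  # 256
```

For this hypothetical layer, the adapter trains 256 times fewer parameters than full fine-tuning, at the price of restricting the update to a low-rank subspace.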

Computational Challenges of Large-Context Transformers

Running inference on a vanilla transformer with a large context window presents two major challenges:

Quadratic GPU Memory Usage

Quadratic Computational Complexity

Understanding the Attention Block

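In a transformer, the attention mechanism is defined as:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d}}\right)V$$

Where:

$Q, K, V \in \mathbb{R}^{n \times d}$

$n$ is the input sequence length

$d$ is the embedding (hidden) dimension

The matrix multiplication $QK^T$ results in an $n \times n$ matrix. Computing it requires roughly $n^2 \times 2d$ floating-point operations (one multiply and one add per term), not counting: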

The subsequent multiplication with V

The feed-forward layers applied after the attention block

Additional transformer blocks: In models like GPT-3, this computation is repeated across 96 sequential blocks, further multiplying the computational cost.
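The attention computation described in this section can be written out directly. The sketch below is a plain-Python, single-head version meant only to show where the $n \times n$ score matrix appears; real implementations use batched tensor libraries and multiple heads, and the helper names here are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax(row):
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """softmax(Q K^T / sqrt(d)) V for Q, K, V of shape n x d."""
    d = len(q[0])
    scores = matmul(q, transpose(k))  # n x n: this is the quadratic term
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)


def qk_flops(n: int, d: int) -> int:
    """Approximate FLOPs for Q K^T: n^2 dot products of length d, 2 ops per term."""
    return n * n * 2 * d


# Doubling the sequence length quadruples the cost of the score matrix.
print(qk_flops(2048, 64) // qk_flops(1024, 64))  # 4
```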

Computational Complexity Example

Consider the GPT-3 architecture with a 1 million token context window:

Transformer Blocks: 96 layers

Hidden Dimension: d = 12,288

Sequence Length: n = 1,000,000 tokens

The computation for a single $QK^T$ operation in one block is:

$$n^2 \times 2d = (10^6)^2 \times 2 \times 12{,}288 \approx 2.46 \times 10^{16} \text{ FLOPs}$$

Hardware Limitations

A single NVIDIA H100 PCIe GPU

Cost: $30,000–$50,000

Performance: 51 teraFLOPS (assuming FP32 precision)

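Time to Compute one $QK^T$ Operation:

$$\frac{n^2 \times 2d}{51 \times 10^{12} \text{ FLOP/s}} = \frac{2.46 \times 10^{16}}{5.1 \times 10^{13}} \approx 482 \text{ seconds} \approx 8 \text{ minutes}$$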

Total Inference Time for Large Context GPT-3:

8 minutes/block × 96 blocks = 768 minutes ≈ 12.8 hours
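The arithmetic behind these figures is easy to reproduce. The short script below assumes the GPU sustains its full 51 teraFLOPS peak, which real workloads rarely achieve, so the result is best read as a lower bound on the time for this one operation. Without rounding to 8 minutes per block, the total comes out slightly above 12.8 hours.

```python
n = 1_000_000        # sequence length in tokens
d = 12_288           # GPT-3 hidden dimension
blocks = 96          # number of transformer blocks
peak_flops = 51e12   # H100 PCIe FP32 peak, in FLOP/s (assumed fully utilized)

flops_per_block = n**2 * 2 * d                    # one Q K^T product
seconds_per_block = flops_per_block / peak_flops
total_hours = seconds_per_block * blocks / 3600

print(f"FLOPs per block:   {flops_per_block:.4e}")
print(f"Seconds per block: {seconds_per_block:.1f}")
print(f"Total hours:       {total_hours:.2f}")
```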

Even with optimizations (parallelizing heads, efficient matrix multiplication, reducing hidden dimensions), processing such long sequences remains computationally prohibitive.

Memory Bottleneck of the KV Cache

Modern transformer-based LLMs are autoregressive:

Naïve Approach: For generating each token, reprocess the entire sequence ($O(n^2)$ per token).

Optimized Approach: Cache key (K) and value (V) matrices to avoid redundant computations.

Caching Mechanism

Computational Complexity Per New Token:

Operations: $n \times 2d$ (with caching, the cost per new token is linear in $n$)

Benefit: Avoids recalculating $QK^T$ for all $n$ tokens ($O(n^2)$)
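A small simulation shows the saving. In the sketch below, each token's vector doubles as its own query, key, and value (the learned projections are omitted for brevity, which is an assumption of this example), and the `KVCache` class is a hypothetical illustration rather than any library's API. Both decoding strategies produce the same final output, but the cached version performs far fewer dot products.

```python
import math


def attend(q, keys, values):
    """Attention output for one query over the given keys and values."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [sum(e / total * v[j] for e, v in zip(exps, values))
            for j in range(len(values[0]))]


class KVCache:
    """Stores past keys and values so each step only processes the new token."""

    def __init__(self):
        self.keys, self.values, self.dot_products = [], [], 0

    def step(self, q, k, v):
        self.keys.append(k)
        self.values.append(v)
        self.dot_products += len(self.keys)  # one dot product per cached key
        return attend(q, self.keys, self.values)


def decode_naive(tokens):
    """Recompute causal attention for the whole prefix at every generation step."""
    dots, out = 0, None
    for t in range(1, len(tokens) + 1):
        prefix = tokens[:t]
        for i in range(t):  # every position is reprocessed from scratch
            out = attend(prefix[i], prefix[: i + 1], prefix[: i + 1])
            dots += i + 1
    return out, dots


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
cache = KVCache()
for x in tokens:
    cached_out = cache.step(x, x, x)
naive_out, naive_dots = decode_naive(tokens)

print(cached_out == naive_out)            # True
print(cache.dot_products, naive_dots)     # 10 20
```

The gap widens quickly with sequence length, since the naive total grows cubically over a full generation while the cached total grows quadratically. The price is the memory needed to hold every past key and value.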

Memory Requirements

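For $n = 1{,}000{,}000$ tokens, per transformer block (storing both K and V at 4 bytes per FP32 value):

$$2 \times n \times d \times 4 \text{ bytes} = 2 \times 10^6 \times 12{,}288 \times 4 \text{ bytes} \approx 98.3 \text{ GB}$$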

Total for 96 blocks:

98.3 GB/block × 96 blocks = 9,436.8 GB ≈ 9.4 TB
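The 98.3 GB per block figure can be reproduced with a few lines of arithmetic. The helper below is illustrative and assumes 4 bytes per value (FP32) and decimal gigabytes; it also estimates how many 80 GB GPUs would be needed just to hold the cache, ignoring model weights and activations.

```python
import math


def kv_cache_bytes(n_tokens: int, d_model: int, n_blocks: int = 1,
                   bytes_per_value: int = 4) -> int:
    """Bytes needed to store K and V (hence the factor 2) for every token."""
    return 2 * n_tokens * d_model * bytes_per_value * n_blocks


per_block = kv_cache_bytes(1_000_000, 12_288)
total = kv_cache_bytes(1_000_000, 12_288, n_blocks=96)
gpus_needed = math.ceil(total / 80e9)  # 80 GB of memory per GPU

print(f"Per block: {per_block / 1e9:.1f} GB")
print(f"Total:     {total / 1e12:.2f} TB")
print(f"80 GB GPUs needed for the cache alone: {gpus_needed}")
```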

Challenges:

An NVIDIA H100 GPU has 80 GB of memory.

Even distributing across multiple GPUs doesn't fully solve the problem. Transformer blocks must be processed sequentially, as each block's output is needed before the next can proceed, limiting full parallelization.

Improvements for Long Context Transformers

Advances in transformer architecture and optimization techniques are tackling the computational and memory challenges of long sequences. Below are key methods that enhance efficiency and scalability for handling extensive text.

Flash Attention

Flash Attention is a technique that optimizes the computation of the attention mechanism to reduce memory usage and increase speed.

Problem Addressed: In vanilla transformers, computing attention scores requires the full n × n attention matrix, leading to quadratic memory consumption.

Key Idea: Flash Attention introduces a custom GPU kernel that computes attention without materializing large intermediate matrices.

How It Works:

Block-wise Computation: Processes the attention mechanism in smaller, more manageable blocks.

Fused Operations: Combines softmax calculation and value matrix multiplication into a single operation.

Online Softmax: Computes softmax on the fly, avoiding the need to store the full attention matrix.

Benefits:

Reduced Memory Usage: Lowers memory requirements from $O(n^2)$ to $O(n)$ in the sequence length.

Increased Speed: Improves computational efficiency through optimized GPU usage and reduced data transfers.
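The online softmax idea is the least intuitive part, so here is a sketch of the recurrence for a single query row. This is plain Python showing only the numerics; it is not the Flash Attention GPU kernel, and the function names and block size are assumptions for illustration. The blockwise version keeps a running maximum, a running normalizer, and a running weighted sum, and never holds more than one block of scores at a time.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_row_naive(q, keys, values):
    """Reference: materializes all n scores for this query before normalizing."""
    scale = 1 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    top = max(scores)
    p = [math.exp(s - top) for s in scores]
    denom = sum(p)
    return [sum(pi * v[j] for pi, v in zip(p, values)) / denom
            for j in range(len(values[0]))]


def attention_row_blockwise(q, keys, values, block_size=2):
    """Same result, but only block_size scores exist at any moment."""
    scale = 1 / math.sqrt(len(q))
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new maximum.
        correction = math.exp(running_max - new_max)
        p = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * correction + sum(p)
        acc = [a * correction + sum(pi * v[j] for pi, v in zip(p, v_blk))
               for j, a in enumerate(acc)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.2, -0.4, 1.0]
keys = [[1.0, 0.0, 0.5], [0.3, 0.3, 0.3], [-1.0, 2.0, 0.1], [0.0, 0.0, 4.0], [2.0, 1.0, -1.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]

naive = attention_row_naive(q, keys, values)
blockwise = attention_row_blockwise(q, keys, values, block_size=2)
print(all(abs(a - b) < 1e-12 for a, b in zip(naive, blockwise)))  # True
```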

Quantization

Quantization reduces the numerical precision of model parameters and activations to decrease memory usage and computational demands.

Problem Addressed: High-precision representations (e.g., 32-bit floating-point) consume significant memory and slow down computations.

Key Idea: Use lower-precision formats (e.g., 16-bit floating-point or 8-bit integers) for storing and computing model data.

How It Works:

Parameter Quantization: Converts model weights and biases to lower-precision formats.

Activation Quantization: Applies lower precision to intermediate computations, including the KV cache.

Quantization-Aware Techniques: Employs strategies during training to maintain model accuracy despite reduced precision.

Benefits:

Memory Savings: Reduces memory required for parameters and activations.

Faster Computations: Less intensive arithmetic speeds up processing.

Larger Models: Enables bigger models or longer sequences on the same hardware.
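A minimal sketch of the idea, assuming symmetric per-tensor 8-bit quantization (one of several possible schemes): each float is mapped to an integer in [-127, 127] using a single scale factor, then mapped back when needed. The function names and sample values are made up for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the stored integers."""
    return [x * scale for x in q]


weights = [0.5, -1.0, 0.25, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("max round-trip error:", max_error)
print("storage vs FP32: 1 byte instead of 4 per value")
```

The round-trip error is bounded by half the scale factor, which is why tensors with a few large outliers quantize poorly under this simple scheme: the outliers stretch the scale and coarsen every other value.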

Conclusion

While Google's Gemini 1.5 Pro highlights the potential of large-context transformers, scaling context windows remains constrained by computational demands, memory bottlenecks, and hardware limitations.

Computational Cost: Without KV caching, the cost of generating each new token is quadratic in the sequence length; with caching, it becomes linear.

Memory Bottleneck: Storing K and V caches for long sequences exceeds current GPU capacity.

Techniques like Flash Attention, quantization, and optimized KV caching provide meaningful improvements but fall short of fully addressing these challenges.

Alternative approaches, such as Retrieval-Augmented Generation (RAG) and fine-tuning, offer practical solutions by balancing efficiency and scalability. Until transformative breakthroughs in architecture and hardware emerge, hybrid methods combining retrieval systems with fine-tuned models will remain the most viable option for large-scale text processing.